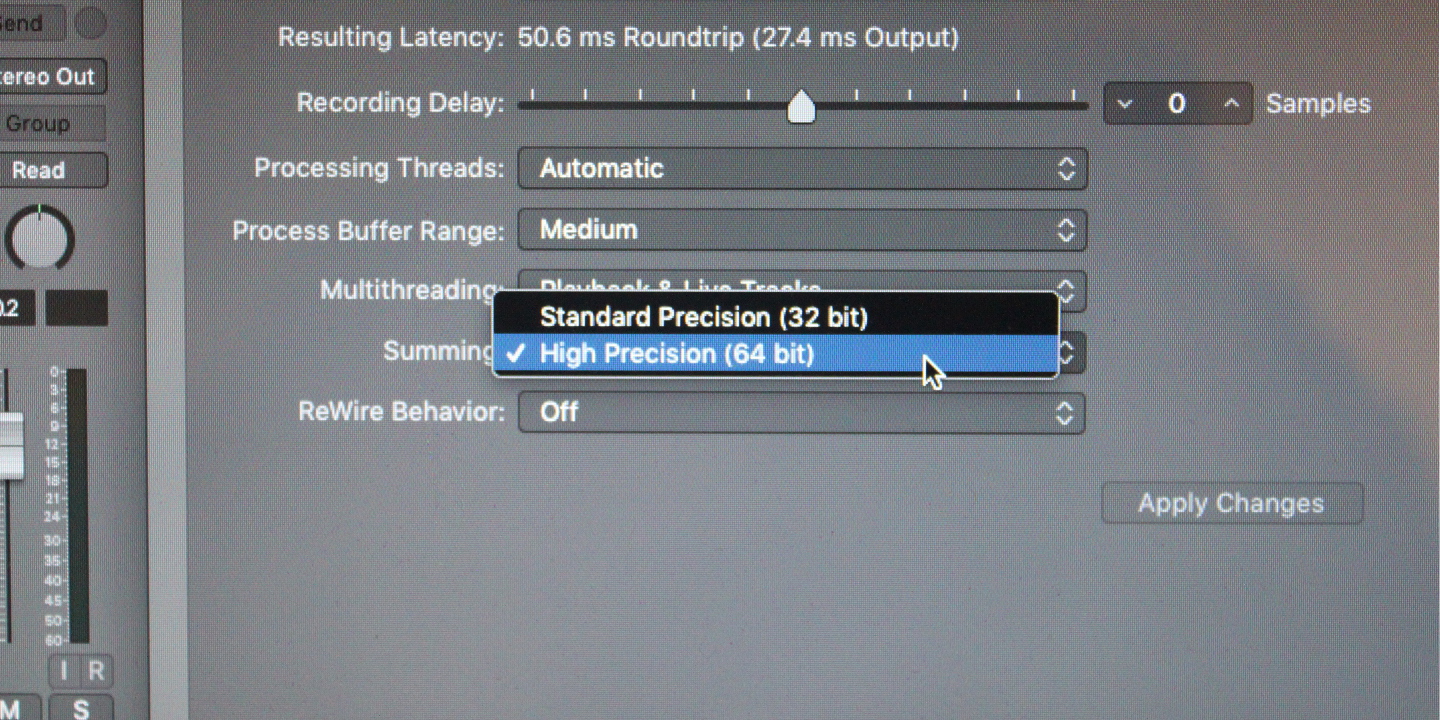

Logic X recently introduced a new feature: 64-bit summing. It’s not the first DAW to support 64-bit summing - Pro Tools HDX had it back in 2013. But how does it work and does it make a difference in practice?

To understand how 64-bit summing works, it helps to have a basic understanding of floating point numbers: what they are and why they’re not quite like “real” numbers. Then we’ll be in a better position to judge the difference that the extra bits make to summing.

Floating Point Numbers

Floating point numbers (“floats” from now on) are used to store and manipulate real numbers: numbers that may contain a fractional component (e.g., 1.0, 1.2, -3.14, etc.). They are useful because they can be added, subtracted, multiplied and divided very quickly by computers.

Floats generally come in two flavours: “single” and “double” precision. Single precision floats are 32 bits in length while “doubles” are 64 bits. Due to the finite size of floats, they cannot represent all of the real numbers - there are limitations on both their precision and range. For example, the (decimal) number 1.1 cannot be represented exactly by a single or double precision binary float, so it is approximated.
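To make the 1.1 example concrete, here is a small Python sketch that prints the exact value actually stored for 1.1 in each format. It relies on Python's `float` being a double on typical platforms; the helper `to_single` is a hypothetical name of mine that round-trips a value through a 32-bit float using the standard `struct` module.

```python
import struct
from decimal import Decimal


def to_single(x: float) -> float:
    """Round a Python float (a double) to the nearest single precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


as_double = 1.1
as_single = to_single(1.1)

# Decimal(float) converts the stored binary value exactly, digit for digit,
# so the approximation error becomes visible.
print(Decimal(as_double))
print(Decimal(as_single))
```

Neither printed value is exactly 1.1, and the single precision value is further from 1.1 than the double precision one.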

Floats are composed of two elements: a significand (or mantissa) and an exponent. For example, a base-ten (decimal) float might look like this:

$$3.14 \times 10^{2},$$

where the significand is 3.14 and the exponent is 2.

The “precision” of a float is determined by the number of bits used to represent the significand. The more bits, the more digits that can be stored. Similarly, the range is determined by the number of bits used to represent the exponent.

The following table shows how bits are allocated for single and double precision floats:

| Precision | Total bits | Significand digits (binary) | Exponent bits |
|---|---|---|---|
| Single | 32 | 24 | 8 |
| Double | 64 | 53 | 11 |
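One way to see the significand sizes from the table in practice is to look for the first integer that no longer fits. The sketch below assumes that Python's `float` is a double (true on typical platforms) and uses a hypothetical helper, `to_single`, to round a value to single precision via the standard `struct` module.

```python
import struct
import sys


def to_single(x: float) -> float:
    """Round a Python float (a double) to the nearest single precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


# Number of binary significand digits in a Python float (a double).
print(sys.float_info.mant_dig)

big = 2.0 ** 24

# 2^24 + 1 needs 25 significand bits, so single precision cannot hold it
# and rounds it back to 2^24.
print(to_single(big + 1.0) == big)

# A double has 53 significand bits, so the same value is stored exactly.
print(big + 1.0 == big)
```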

Floating Point Addition

To see how rounding affects floating point operations, let’s step through an example and add two numbers: $3.0 \times 10^{1}$ and $5.0 \times 10^{-1}$. For simplicity, we’ll work in base-ten and assume that our floats have two digits of precision.

The first step is to re-write each number with the same exponent:

$$3.0 \times 10^{1} \quad \text{and} \quad 0.05 \times 10^{1}$$

Notice that the second number requires three digits of precision when re-written with an exponent of 1. In fact, this is OK because floats are added using a single “guard” digit. If this were not the case, the last digit (the one containing the 5) would be truncated.

Adding the two floats gives:

$$3.0 \times 10^{1} + 0.05 \times 10^{1} = 3.05 \times 10^{1}$$

But our floats only have two digits of precision, so this value is rounded to:

$$3.0 \times 10^{1}$$

(Note: you may have expected the result to be $3.1 \times 10^{1}$ but that’s not how floating point rounding works. For digits that are “half-way,” like 0.5, we round to the nearest, even value. Since 0 is even and 1 is not, we round down. If we were rounding 1.5, we would round to 2 because 2 is even.)

Notice that $3.0 \times 10^{1}$ is not the “correct” answer (i.e., $3.05 \times 10^{1}$). After the rounding operation, the guard digit is discarded and the result only approximates the correct value. In fact, the error is so large that it is as if we didn’t even add the second number to the first!
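The two-digit, base-ten addition can be imitated with Python's standard `decimal` module, which lets you choose both the precision and the rounding rule. This is only a sketch of the toy format described here, not of how hardware floats are implemented, and the operands 30 and 0.5 are illustrative values assumed for the demonstration.

```python
from decimal import Context, Decimal, ROUND_HALF_EVEN, ROUND_HALF_UP

# Toy float format: base ten, two significant digits, round half to even.
two_digits = Context(prec=2, rounding=ROUND_HALF_EVEN)

a = Decimal("3.0E+1")  # 30 (illustrative operand)
b = Decimal("5.0E-1")  # 0.5 (illustrative operand)

# The default context keeps 28 digits, so this sum is exact.
exact = a + b

# The same addition, rounded to two digits with ties going to the even digit.
rounded = two_digits.add(a, b)

# For contrast: the "school" rule that always rounds a half upwards.
half_up = Context(prec=2, rounding=ROUND_HALF_UP).add(a, b)

# Rounding 1.5 to one digit under round-half-to-even.
one_digit = Context(prec=1, rounding=ROUND_HALF_EVEN)
tie_example = one_digit.plus(Decimal("1.5"))

print(exact, rounded, half_up, tie_example)
```

With round-half-to-even the sum collapses back to 30, while the round-half-up context gives 31, and 1.5 rounds to 2.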

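Rounding errors can accumulate and become a significant problem. To illustrate this, imagine that we add $5.0 \times 10^{-1}$ to $3.0 \times 10^{1}$ one-hundred times:

$$3.0 \times 10^{1} + \underbrace{5.0 \times 10^{-1} + \cdots + 5.0 \times 10^{-1}}_{100 \text{ times}}$$

The correct answer is $8.0 \times 10^{1}$, but based on our previous example, we can show that the floating point answer will be $3.0 \times 10^{1}$, which is off by over 60%!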
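The accumulation effect is easy to reproduce with the same kind of toy format. The sketch below again uses Python's `decimal` module with two digits of precision and round-half-to-even; the starting value of 30 and the step of 0.5 are illustrative values assumed for the demonstration.

```python
from decimal import Context, Decimal, ROUND_HALF_EVEN

# Toy float format: base ten, two significant digits, round half to even.
two_digits = Context(prec=2, rounding=ROUND_HALF_EVEN)

start = Decimal("30")   # illustrative starting value
step = Decimal("0.5")   # illustrative increment

# Add the step one hundred times, rounding to two digits after every addition.
total = start
for _ in range(100):
    total = two_digits.add(total, step)

# The same sum computed with enough digits to be exact.
exact = start + 100 * step

relative_error = (exact - total) / exact
print(total, exact, relative_error)
```

Every individual addition rounds back to the starting value, so the running total never moves even though the exact sum is far larger.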

So, floating point calculations are susceptible to rounding errors and those errors can accumulate.

32-Bit vs. 64-Bit Summing

One way to achieve more accurate summing is to use a higher precision “accumulator.”

For example, if you have a set of single precision floats that you’d like to sum, using a 64-bit accumulator will yield a more accurate result. After the sum has been calculated, the 64-bit value can be rounded to a 32-bit value.
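Here is a minimal sketch of the two strategies in Python. It is not how any particular DAW implements its mixer; the helper names are hypothetical, and single precision is emulated by rounding a double through the standard `struct` module after each operation. The sample values are contrived so that the difference shows up after only a few additions.

```python
import math
import struct


def to_single(x: float) -> float:
    """Round a Python float (a double) to the nearest single precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def sum_single(samples):
    """32-bit accumulator: the running total is rounded after every addition."""
    acc = 0.0
    for s in samples:
        acc = to_single(acc + s)
    return acc


def sum_double(samples):
    """64-bit accumulator: accumulate in double, round to single once at the end."""
    acc = 0.0
    for s in samples:
        acc += s
    return to_single(acc)


# Contrived input: one full-scale sample followed by four tiny ones, each
# smaller than half the spacing between single precision values near 1.0.
samples = [1.0] + [2.0 ** -25] * 4

print(sum_single(samples))   # the tiny samples are rounded away one by one
print(sum_double(samples))   # the tiny samples survive and add up to 2^-23
print(math.fsum(samples))    # correctly rounded reference sum
```

In this case the 32-bit accumulator returns exactly 1.0, while the 64-bit accumulator returns the true sum, which happens to be representable as a single precision float.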

But how much does this affect the result? Can you actually hear the difference, or is it simply a marketing gimmick?

Let’s assume that you have a set of 32-bit sample values that lie in the range [-1, 1] (i.e., at or below 0 dBFS). In that range, the numbers with the greatest magnitude are -1 and 1. If we were to “naively” sum all of these numbers using a 32-bit accumulator, what would the maximum possible error be?

To answer that, first ask yourself: what is the maximum possible error at each step? In our “Floating Point Addition” example, we saw that the result of a single addition can be off by as much as half of the final digit’s place value. For a 32-bit float with a 24-bit significand and a magnitude close to 1, this equals an error of $\epsilon = 2^{-24}$.

Now, suppose that we have $N$ floats to sum, and each addition adds $\epsilon$ of error: the total error will be $N\epsilon$. If we have 32 tracks to sum, then $N = 32$ and the total error is $32 \times 2^{-24} = 2^{-19}$, or -114 dBFS.

That’s a loss of five bits of precision, which is not insignificant; however, it is important to remember that this is a contrived, worst-case scenario. We should expect the average error to be lower than this in practice.

Nevertheless, how does this compare with using a 64-bit accumulator?

With a 64-bit accumulator, the maximum error per addition is $2^{-53}$ and accumulating this error 32 times yields an error of $32 \times 2^{-53} = 2^{-48}$, or -289 dBFS, which is beyond the precision of a 32-bit float.
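The dBFS figures for both accumulators can be checked with a few lines of Python. This sketch follows the simplified worst-case model used here: each addition is assumed to contribute an error of half the last significand bit's place value for a number near 1, and the errors are assumed to simply add up. The function name and its parameters are hypothetical.

```python
import math


def worst_case_error_dbfs(significand_bits: int, num_tracks: int) -> float:
    """Worst-case accumulated rounding error, in dB relative to full scale."""
    # Half the place value of the last significand bit for a number near 1.0.
    per_addition = 2.0 ** -significand_bits
    total = num_tracks * per_addition
    return 20.0 * math.log10(total)


print(round(worst_case_error_dbfs(24, 32)))  # 32-bit accumulator: -114
print(round(worst_case_error_dbfs(53, 32)))  # 64-bit accumulator: -289
```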

In other words, the final 32-bit float value would be as close to the actual value as it possibly could be, with no error introduced during the summation. For that reason alone, it seems like a no-brainer to use 64-bit summation, but how does the performance compare with 32-bit summation?

Is 64-Bit Summing Slow?

The answer, unfortunately, is: it depends… Modern CPUs/FPUs may process double precision floats just as fast as (or faster than) single precision floats; however, “doubles” use twice as much memory, so they are not as cache-friendly. Whether double precision or single precision processing is faster will often depend on memory access patterns.

In the case of 64-bit summation, only the accumulator is 64 bits wide, while the sample values are 32 bits. For this reason, I would not expect there to be much of a “load” penalty (whether from the cache or main memory), if any.

Your best bet is to try enabling and disabling 64-bit summing while monitoring CPU usage.

Summary

I wouldn’t expect 64-bit summing to have any drastic effect on your sound quality. Even in worst-case scenarios, the effect is relatively minor. However, enabling 64-bit summing isn’t likely to incur a performance hit on modern computers, so there isn’t much of a reason not to enable it.